39 learning with less labels

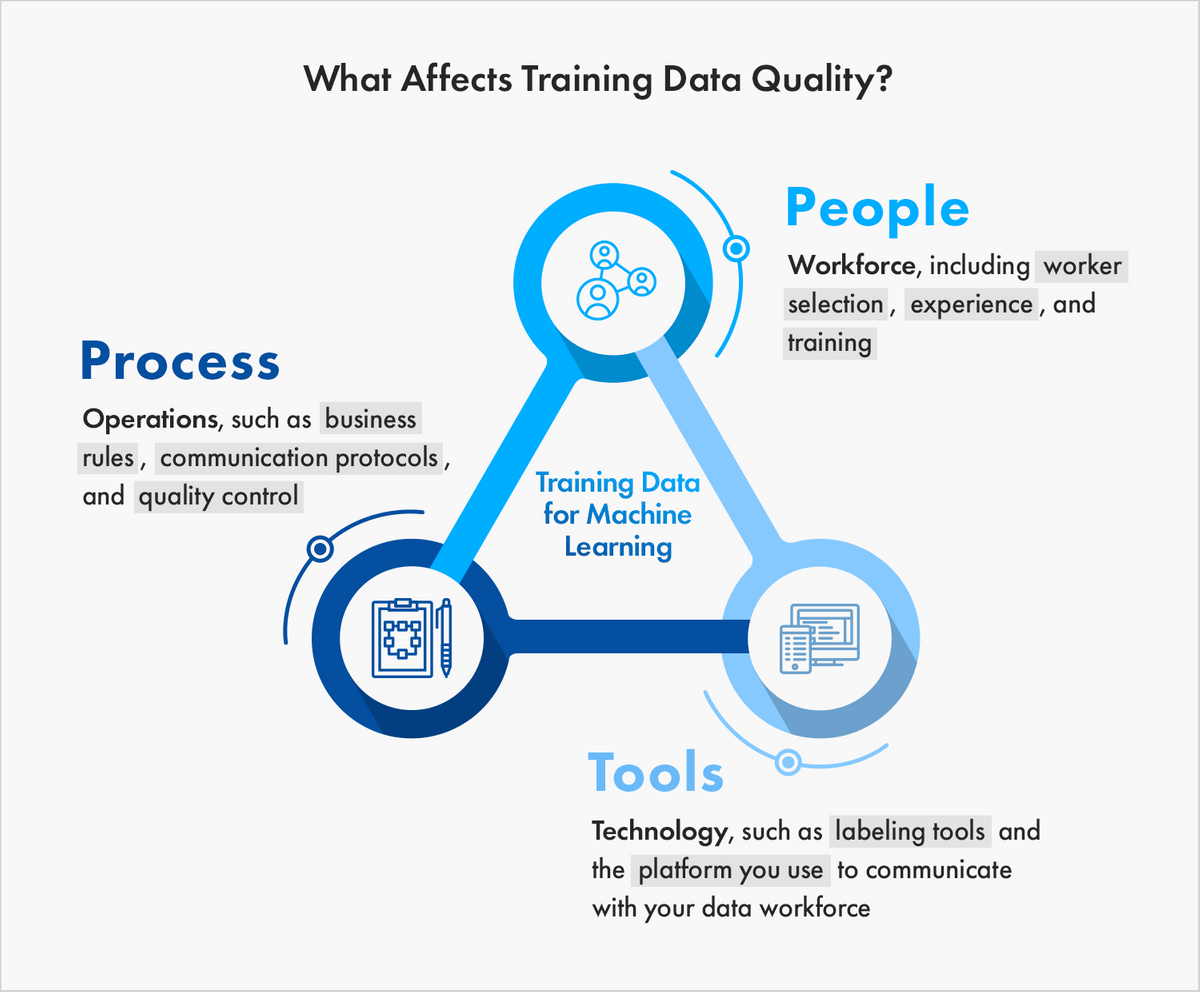

What Is Data Labeling in Machine Learning? - Label Your Data In machine learning, a label is added by human annotators to explain a piece of data to the computer. This process is known as data annotation and is necessary to convey the human understanding of the real world to machines. Data labeling tools and providers of annotation services are an integral part of a modern AI project.

How To Create Labels - W3Schools W3Schools offers free online tutorials, references and exercises in all the major languages of the web. Covering popular subjects like HTML, CSS, JavaScript, Python, SQL, Java, and many, many more.

Better reliability of deep learning classification models by exploiting ... When both scores are high and the original model (e.g. breed) predicts a label that matches the plausibility prediction (e.g. animal), we can assume that the prediction is correct. Example: 0.98 Dog / 0.89 Akita. When both scores are low, we know that the result is not reliable or that there is simply something else in the image.
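The plausibility check described in the snippet above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `PARENT` mapping, the `is_reliable` name, and the 0.8 threshold are hypothetical and do not come from the original article.

```python
# Hypothetical mapping from a fine-grained label (breed) to its coarse parent (animal).
PARENT = {"Akita": "Dog", "Beagle": "Dog", "Siamese": "Cat"}


def is_reliable(coarse, fine, threshold=0.8):
    """Return True when both predictions are confident and mutually consistent.

    `coarse` and `fine` are (label, score) pairs; the threshold is an assumed value.
    """
    coarse_label, coarse_score = coarse
    fine_label, fine_score = fine
    # The fine label must belong to the coarse class predicted by the plausibility model.
    consistent = PARENT.get(fine_label) == coarse_label
    return consistent and coarse_score >= threshold and fine_score >= threshold


print(is_reliable(("Dog", 0.98), ("Akita", 0.89)))  # the example from the snippet
```

A prediction that fails the check is not necessarily wrong; it is only flagged as one that should not be trusted without further review.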

Learning with less labels

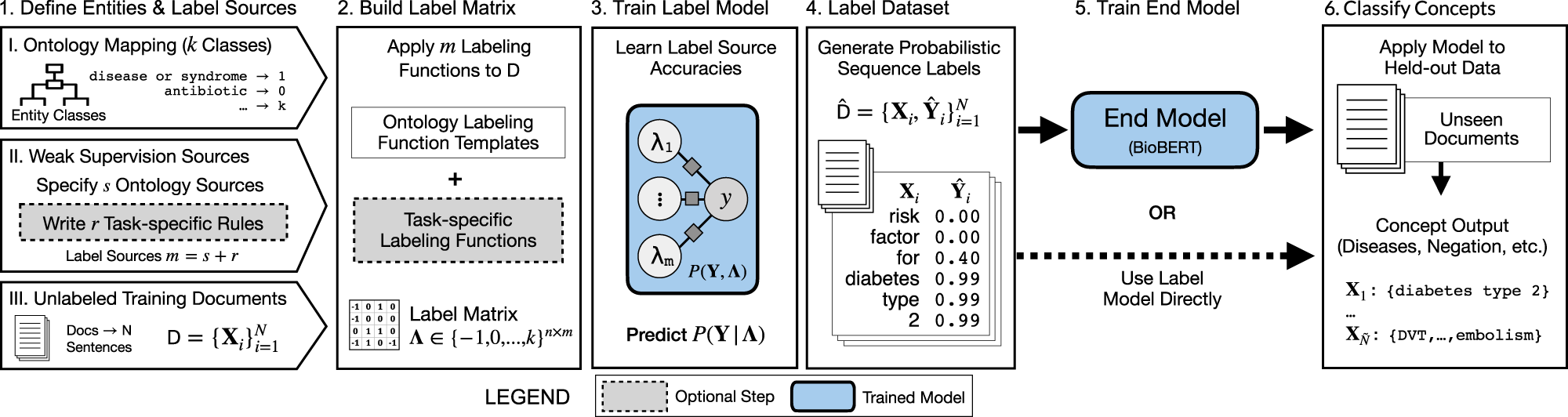

No labels? No problem! Machine learning without labels using… | by ... `from snorkel.labeling import labeling_function` `@labeling_function()` `def ccs(x): return 1 if "use by uk public sector bodies" in x.desc.lower() else -1`. Great, we have just created our first label function! We now build up a number of other functions which will help separate frameworks from contracts. Tips on creating effective labelling functions

Learning with Less Labels and Imperfect Data | MICCAI 2020 - hvnguyen For these reasons, machine learning researchers often rely on domain experts to label the data. This process is expensive and inefficient and is therefore often unable to produce a sufficient number of labels for deep networks to flourish. Second, to make matters worse, medical data are often noisy and imperfect.

LwFLCV: Learning with Fewer Labels in Computer Vision This special issue focuses on learning with fewer labels for computer vision tasks such as image classification, object detection, semantic segmentation, instance segmentation, and many others. The topics of interest include (but are not limited to) the following areas:
• Self-supervised learning methods
• New methods for few-/zero-shot learning
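The labeling-function idea from the Snorkel snippet above can be illustrated without the library. The sketch below is plain Python, not Snorkel's API: the label constants, the two extra functions, and the majority-vote combiner are all hypothetical, and treating -1 as "abstain" is an assumption about the snippet's intent.

```python
from collections import Counter
from types import SimpleNamespace

# Assumed label scheme: -1 means the function abstains.
ABSTAIN, CONTRACT, FRAMEWORK = -1, 0, 1


def lf_public_sector(x):
    # Mirrors the rule quoted in the snippet.
    return FRAMEWORK if "use by uk public sector bodies" in x.desc.lower() else ABSTAIN


def lf_mentions_framework(x):
    # Hypothetical extra rule, for illustration only.
    return FRAMEWORK if "framework" in x.desc.lower() else ABSTAIN


def lf_single_supplier(x):
    # Hypothetical extra rule, for illustration only.
    return CONTRACT if "single supplier" in x.desc.lower() else ABSTAIN


LFS = [lf_public_sector, lf_mentions_framework, lf_single_supplier]


def majority_vote(x, lfs=LFS):
    """Combine the non-abstaining votes; ties go to the label voted first."""
    votes = [v for v in (lf(x) for lf in lfs) if v != ABSTAIN]
    if not votes:
        return ABSTAIN
    return Counter(votes).most_common(1)[0][0]


row = SimpleNamespace(desc="Framework for use by UK public sector bodies")
print(majority_vote(row))
```

Majority voting is the simplest possible combiner. Weak-supervision libraries typically go further and estimate how accurate each function is before combining the votes.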

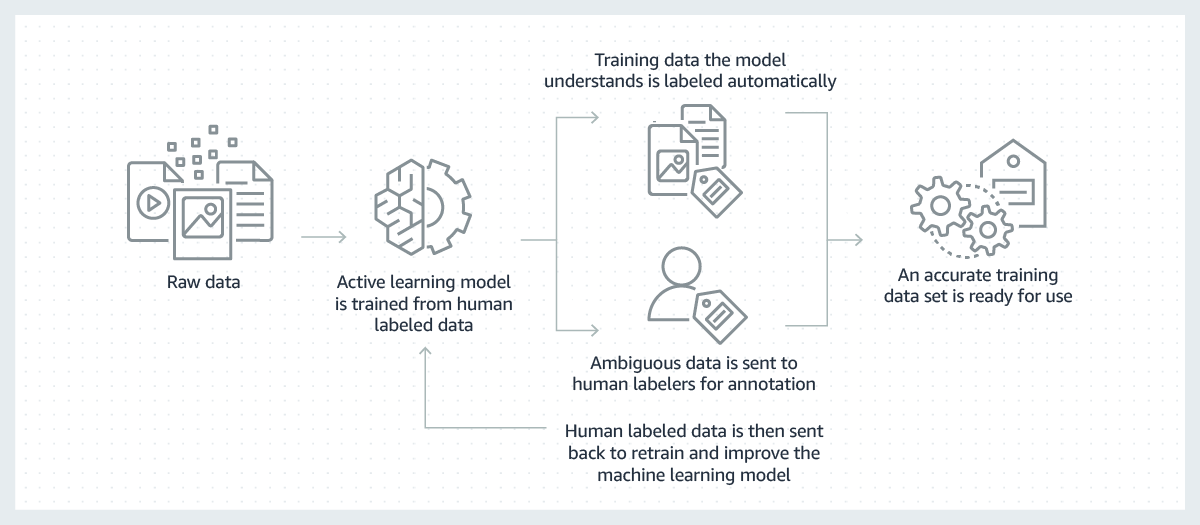

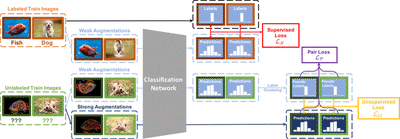

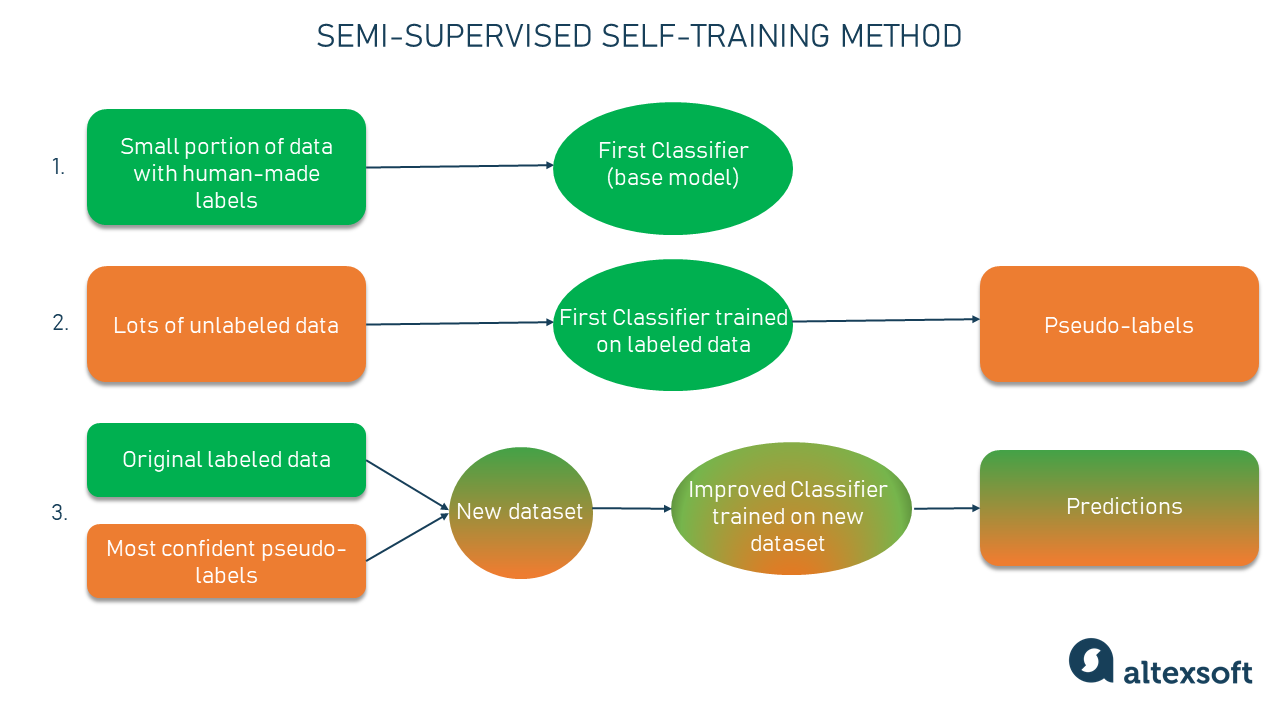

Less Labels, More Efficiency: Charles River Analytics Develops New ... Charles River Analytics Inc., developer of intelligent systems solutions, has received funding from the Defense Advanced Research Projects Agency (DARPA) as part of the Learning with Less Labels program. This program is focused on making machine-learning models more efficient and reducing the amount of labeled data required to build models.

Less Labels, More Learning | AI News & Insights It learns from a small set of labeled images in typical supervised fashion. It learns from unlabeled images as follows: FixMatch modifies unlabeled examples with a simple horizontal or vertical translation, horizontal flip, or other basic translation. The model classifies these weakly augmented images.

Learning with Less Labeling (LwLL) | Zijian Hu The Learning with Less Labeling (LwLL) program aims to make the process of training machine learning models more efficient by reducing the amount of labeled data required to build a model by six or more orders of magnitude, and by reducing the amount of data needed to adapt models to new environments to tens to hundreds of labeled examples. ...
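The FixMatch snippet above stops at the weak-augmentation step. As a rough sketch of how the unlabeled loss continues in the published FixMatch method (not in the snippet itself): a confident prediction on the weakly augmented image becomes a pseudo-label, and the model is trained to predict it on a strongly augmented view. The function name and the plain-list probability inputs are assumptions made for illustration; 0.95 is the confidence threshold commonly quoted for FixMatch.

```python
import math


def fixmatch_unlabeled_loss(weak_probs, strong_probs, threshold=0.95):
    """Cross-entropy against the pseudo-label, or 0.0 if the model is not confident.

    weak_probs / strong_probs are class-probability lists for the weakly and
    strongly augmented views of the same unlabeled image.
    """
    confidence = max(weak_probs)
    if confidence < threshold:
        # Low-confidence examples contribute nothing to the unlabeled loss.
        return 0.0
    pseudo_label = weak_probs.index(confidence)
    return -math.log(strong_probs[pseudo_label])


print(fixmatch_unlabeled_loss([0.97, 0.03], [0.60, 0.40]))
```

In a real training loop this term is averaged over a batch of unlabeled images and added to the ordinary supervised loss on the labeled images.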

Learning with less labels in Digital Pathology via Scribble Supervision ... Download Citation | Learning with less labels in Digital Pathology via Scribble Supervision from natural images | A critical challenge of training deep learning models in the Digital Pathology (DP ...

[PDF] Learning with Less Labels in Digital Pathology via Scribble ... It is demonstrated that scribble labels from NI domain can boost the performance of DP models on two cancer classification datasets and yield the same performance boost as full pixel-wise segmentation labels despite being significantly easier and faster to collect. A critical challenge of training deep learning models in the Digital Pathology (DP) domain is the high annotation cost by medical ...

Learning with Less Labels Imperfect Data | Hien Van Nguyen For these reasons, machine learning researchers often rely on domain experts to label the data. This process is expensive and inefficient and is therefore often unable to produce a sufficient number of labels for deep networks to flourish. Second, to make matters worse, medical data are often noisy and imperfect.

Machine learning with less than one example - TechTalks A new technique dubbed "less-than-one-shot learning" (or LO-shot learning), recently developed by AI scientists at the University of Waterloo, takes one-shot learning to the next level. The idea behind LO-shot learning is that to train a machine learning model to detect M classes, you need less than one sample per class.

DARPA Learning with Less Labels LwLL - Grant Bulletin Aug 15, 2018. DARPA Learning with Less Labels (LwLL) HR001118S0044. Abstract Due: August 21, 2018, 12:00 noon (ET). Proposal Due: October 2, 2018, 12:00 noon (ET). Proposers are highly encouraged to submit an abstract in advance of a proposal to minimize effort and reduce the potential expense of preparing an out-of-scope proposal.

Learning with Auxiliary Less-Noisy Labels - Institute of Automation However, learning with less-accurate labels can lead to serious performance deterioration because of the high noise rate. Although several learning methods (e.g., noise-tolerant classifiers) have been advanced to increase classification performance in the presence of label noise, only a few of them take the noise rate into account and utilize ...

Domain Adaptation and Representation Transfer and Medical Image ... Domain Adaptation and Representation Transfer and Medical Image Learning with Less Labels and Imperfect Data. First MICCAI Workshop, DART 2019, and First International Workshop, MIL3ID 2019, Shenzhen, Held in Conjunction with MICCAI 2019, Shenzhen, China, October 13 and 17, 2019, Proceedings. Editors: Qian Wang, Fausto Milletari, Hien V. Nguyen,

[2201.02627] Learning with Less Labels in Digital Pathology via ... One potential weakness of relying on class labels is the lack of spatial information, which can be obtained from spatial labels such as full pixel-wise segmentation labels and scribble labels. We demonstrate that scribble labels from NI domain can boost the performance of DP models on two cancer classification datasets (Patch Camelyon Breast Cancer and Colorectal Cancer dataset).

Learning With Less Labels - YouTube

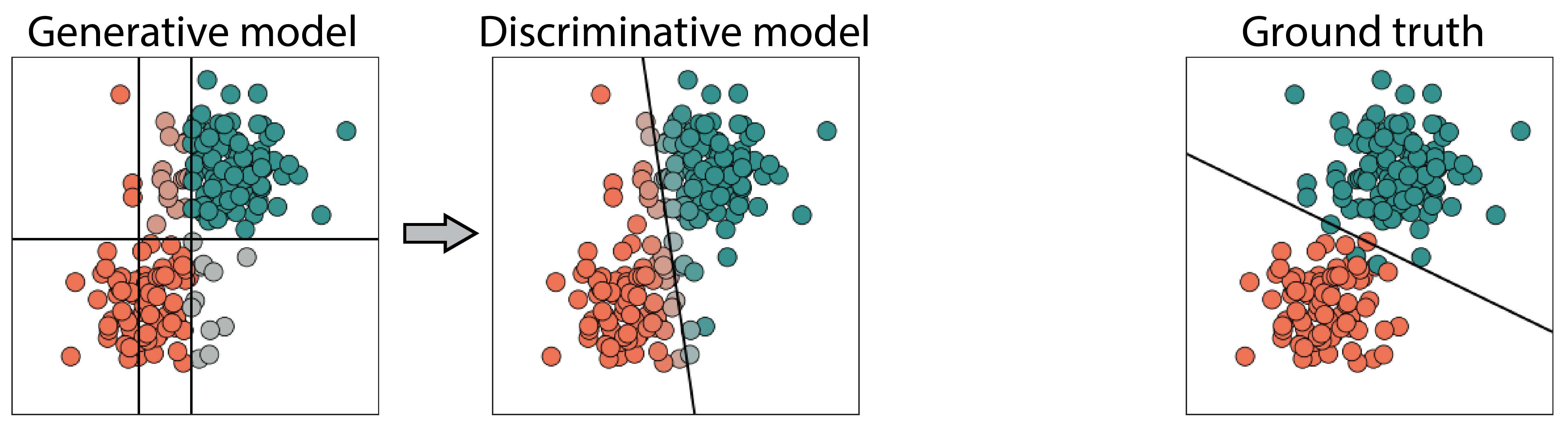

Classification in Machine Learning: What it is and ... Aug 23, 2022 · This is also how Supervised Learning works with machine learning models. In Supervised Learning, the model learns by example. Along with our input variable, we also give our model the corresponding correct labels. While training, the model gets to look at which label corresponds to our data and hence can find patterns between our data and those ...

Domain Adaptation and Representation Transfer and Medical Image Learning with Less Labels and Imperfect Data (Lecture Notes in Computer Science)

Multi-Label Classification with Deep Learning - machinelearningmastery.com Aug 30, 2020 · Multi-label classification involves predicting zero or more class labels. Unlike normal classification tasks where class labels are mutually exclusive, multi-label classification requires specialized machine learning algorithms that support predicting multiple mutually non-exclusive classes or "labels." Deep learning neural networks are an example of an algorithm that natively supports ...
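To make "zero or more class labels" concrete, here is a minimal sketch of the usual decision rule for multi-label outputs: each class gets its own independent sigmoid score, and every class whose score clears a threshold is predicted. The function names, class names, and the 0.5 threshold are illustrative assumptions rather than details from the article.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_labels(logits, class_names, threshold=0.5):
    """Return every class whose independent sigmoid score reaches the threshold.

    Unlike softmax classification, the scores are not forced to sum to 1,
    so the result may contain no labels, one label, or several.
    """
    return [name for z, name in zip(logits, class_names) if sigmoid(z) >= threshold]


CLASSES = ["cat", "dog", "outdoor"]  # hypothetical label set
print(predict_labels([2.0, -1.0, 0.3], CLASSES))
print(predict_labels([-3.0, -2.0, -1.5], CLASSES))
```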

subeeshvasu/Awesome-Learning-with-Label-Noise - GitHub
• 2017 - Learning with Auxiliary Less-Noisy Labels.
• 2018-AAAI - Deep learning from crowds.
• 2018-ICLR - mixup: Beyond Empirical Risk Minimization.
• 2018-ICLR - Learning From Noisy Singly-labeled Data.
• 2018-ICLR_W - How Do Neural Networks Overcome Label Noise?.
• 2018-CVPR - CleanNet: Transfer Learning for Scalable Image Classifier Training with Label ...

Learning To Read Labels :: Diabetes Education Online - dtc.ucsf.edu Remember, when you are learning to count carbohydrates, measure the exact serving size to help train your eye to see what portion sizes look like. When, for example, the serving size is 1 cup, then measure out 1 cup. If you measure out a cup of rice, then compare that to the size of your fist.

Learning with Less Labels in Digital Pathology via Scribble Supervision ... One potential weakness of relying on class labels is the lack of spatial information, which can be obtained from spatial labels such as full pixel-wise segmentation labels and scribble labels. We demonstrate that scribble labels from NI domain can boost the performance of DP models on two cancer classification datasets (Patch Camelyon Breast Cancer and Colorectal Cancer dataset).

Guidelines for Making Wall Labels for Your Art Exhibition - artbizsuccess.com Aug 08, 2019 · Labels within an exhibition should all be the same size unless there is need for longer, explanatory text. Place object labels to the right if at all possible. Large sculpture may require that you place a label on the nearest wall or floor. Hang all labels at the same height and use a level to make sure they are parallel to the floor.

Learning with less labels in Digital Pathology via Scribble Supervision ... Learning with less labels in Digital Pathology via Scribble Supervision from natural images. A critical challenge of training deep learning models in the Digital Pathology (DP) domain is the high annotation cost by medical experts. One way to tackle this issue is via transfer learning from the natural image domain (NI ...

Learning With Auxiliary Less-Noisy Labels | Semantic Scholar A learning method is proposed in which not only noisy labels but also auxiliary less-noisy labels, which are available for a small portion of the training data, are taken into account. The proposed method is tolerant to label noise and outperforms classifiers that do not explicitly consider the auxiliary less-noisy labels. Obtaining a sufficient number of accurate labels to form a training set for ...

Performance Metrics in Machine Learning — Part 3: Clustering - towardsdatascience.com Jan 31, 2021 · The only drawback of the Rand Index is that it assumes that we can find the ground-truth cluster labels and use them to evaluate the performance of our model, so it is much less useful than the Silhouette Score for pure Unsupervised Learning tasks. To calculate the Rand Index: `sklearn.metrics.rand_score(labels_true, labels_pred)` Adjusted Rand Index
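For readers who want to see what the Rand Index measures, the following is a small pure-Python sketch of the standard definition: the fraction of sample pairs on which the two labelings agree about "same cluster" versus "different cluster". The function name `rand_index` is my own, and the handling of inputs with fewer than two samples is an assumption.

```python
from itertools import combinations


def rand_index(labels_true, labels_pred):
    """Fraction of sample pairs on which the two labelings agree."""
    if len(labels_true) != len(labels_pred):
        raise ValueError("both labelings must cover the same samples")
    pairs = list(combinations(range(len(labels_true)), 2))
    if not pairs:
        return 1.0  # assumed convention when there are no pairs to compare
    agreements = sum(
        (labels_true[i] == labels_true[j]) == (labels_pred[i] == labels_pred[j])
        for i, j in pairs
    )
    return agreements / len(pairs)


# Cluster IDs are arbitrary, so a relabeled but identical clustering scores 1.0.
print(rand_index([0, 0, 1, 1], [1, 1, 0, 0]))
```

The pairwise loop is quadratic in the number of samples, so it is meant for understanding the metric rather than for large datasets.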

Paper tables with annotated results for Learning with Less Labels in ... Learning with Less Labels in Digital Pathology via Scribble Supervision from Natural Images. A critical challenge of training deep learning models in the Digital Pathology (DP) domain is the high annotation cost by medical experts. One way to tackle this issue is via transfer learning from the natural image domain (NI), where the annotation ...

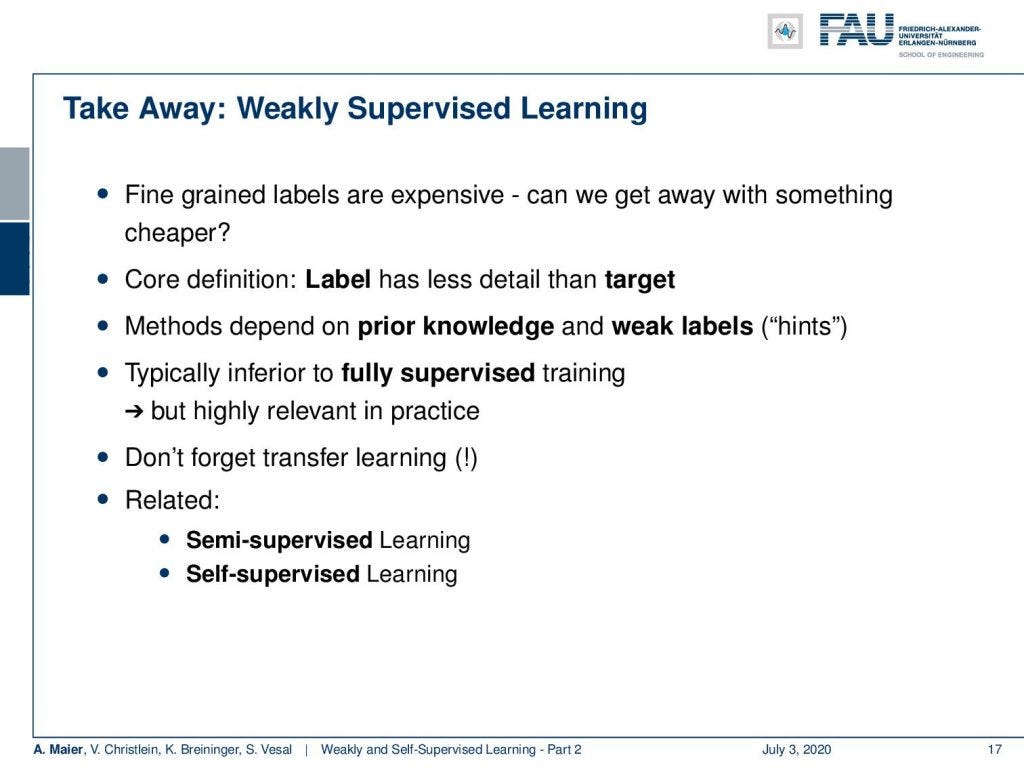

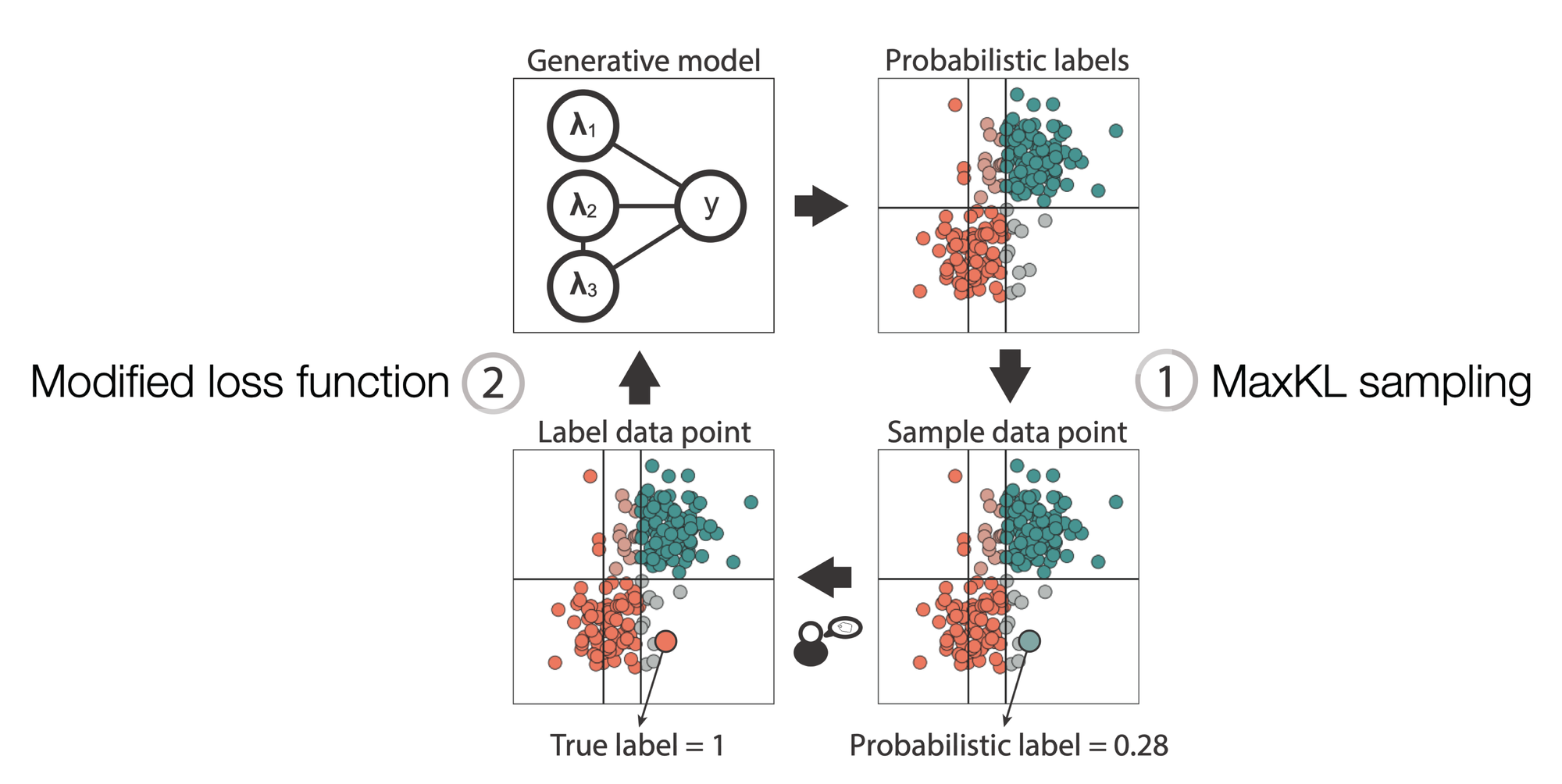

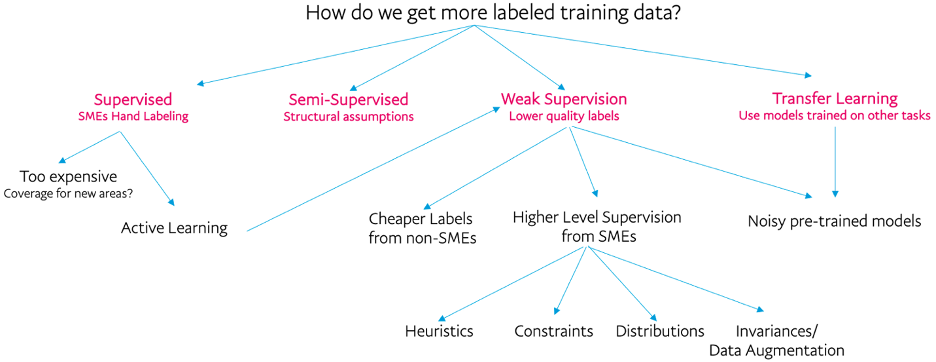

Learning with Limited Labeled Data, ICLR 2019 Increasingly popular approaches for addressing this labeled data scarcity include using weak supervision (higher-level approaches to labeling training data that are cheaper and/or more efficient, such as distant or heuristic supervision, constraints, or noisy labels); multi-task learning, to effectively pool limited supervision signal; data ...

Learning With Auxiliary Less-Noisy Labels | IEEE Journals & Magazine ... Obtaining a sufficient number of accurate labels to form a training set for learning a classifier can be difficult due to the limited access to reliable label resources. Instead, in real-world applications, less-accurate labels, such as labels from nonexpert labelers, are often used. However, learning with less-accurate labels can lead to serious performance deterioration because of the high ...

Learning with Less Labeling - DARPA The Learning with Less Labeling (LwLL) program aims to make the process of training machine learning models more efficient by reducing the amount of labeled data required to build a model by six or more orders of magnitude, and by reducing the amount of data needed to adapt models to new environments to tens to hundreds of labeled examples.

Learning With Auxiliary Less-Noisy Labels - PubMed Although several learning methods (e.g., noise-tolerant classifiers) have been advanced to increase classification performance in the presence of label noise, only a few of them take the noise rate into account and utilize both noisy but easily accessible labels and less-noisy labels, a small amount of which can be obtained with an acceptable added time cost and expense.

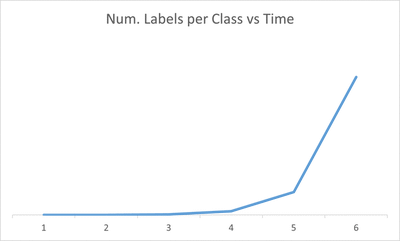

Pro Tips: How to deal with Class Imbalance and Missing Labels Any of these classifiers can be used to train the malware classification model. Class Imbalance. As the name implies, class imbalance is a classification challenge in which the proportion of data from each class is not equal. The degree of imbalance can be minor, for example, 4:1, or extreme, like 1000000:1.
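One common way to respond to the imbalance described above is to weight each class inversely to its frequency. The sketch below computes the imbalance ratio and such weights in plain Python; the function names and the toy malware/benign labels are assumptions for illustration, and the article may recommend a different remedy.

```python
from collections import Counter


def imbalance_ratio(labels):
    """Size of the largest class divided by the size of the smallest."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())


def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


labels = ["benign"] * 4 + ["malware"]  # a minor 4:1 imbalance
print(imbalance_ratio(labels))
print(balanced_class_weights(labels))
```

Errors on the rare class then count for more during training, which partly offsets its small share of the data.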

Barcode Labels and Tags | Zebra With more than 400 stocked ZipShip paper and synthetic labels and tags – all ready to ship within 24 hours – Zebra has the right label and tag on hand for your application. From synthetic materials to basic paper solutions, custom to compliance requirements, hard-to-label surfaces to easy-to-remove labels, or tamper-evident to tear-proof ...

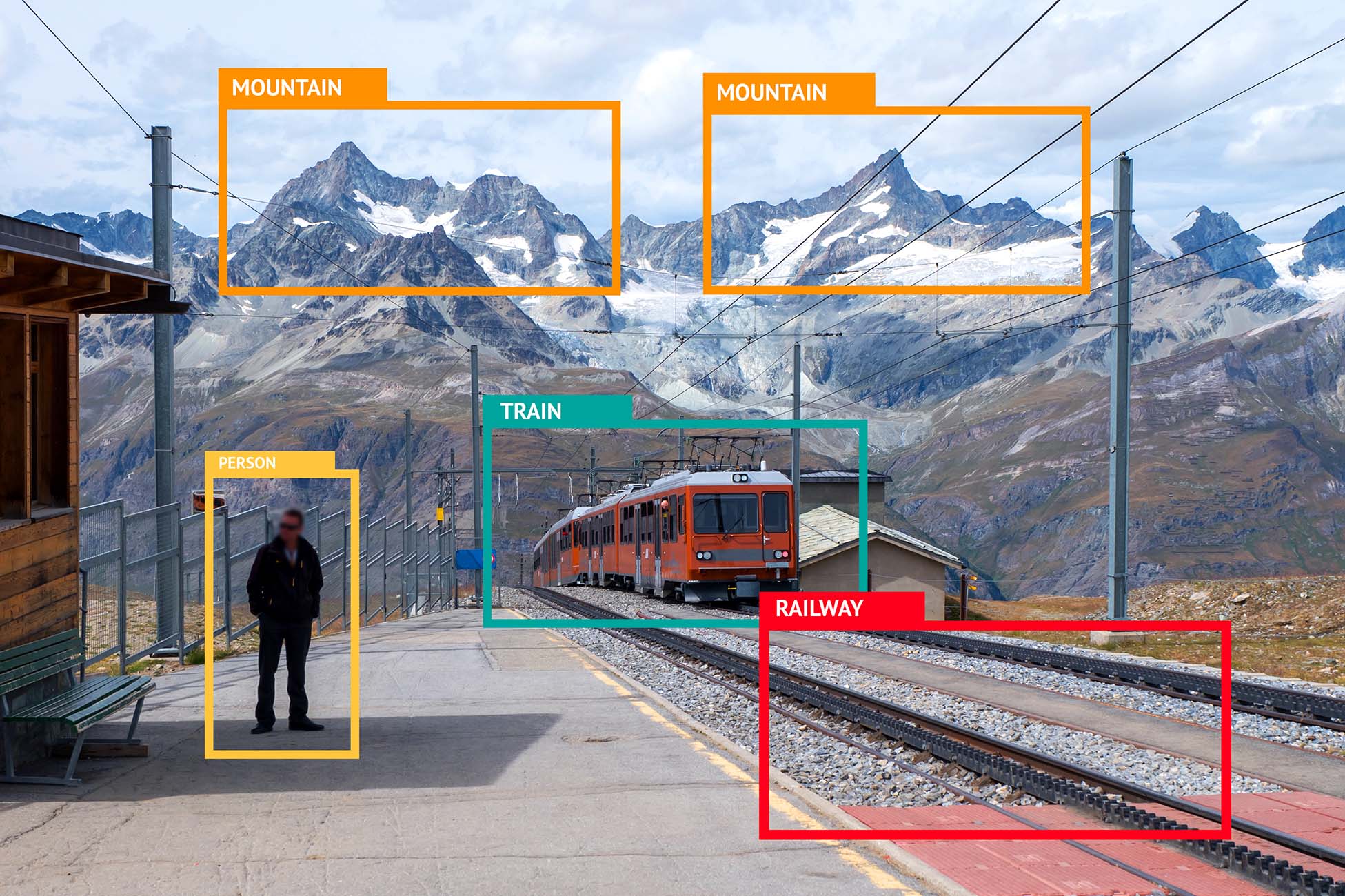

![Google DeepMind: Representation Learning Without Labels- Part 1 [ICML Tutorial]](https://i.ytimg.com/vi/OK6TJIS1RZ0/maxresdefault.jpg)

![[PDF] Image Classification with Deep Learning in the Presence ...](https://d3i71xaburhd42.cloudfront.net/33a2e0c7ea17031f4e6f28496a9b8f3222cb2904/4-TableI-1.png)

![What Is Transfer Learning? [Examples & Newbie-Friendly Guide]](https://assets-global.website-files.com/5d7b77b063a9066d83e1209c/616b35e31e352613b291f4c7_P46neY1rhMd5NbTxhCbaWWAnwJwhgCZjSLp2C2-6Pzf8sBEfAxtlhnAOV_Jq_gX-zOaztDWLrtFal42V-EDr86Gcd8QYrWh4uMxZ-_-X_Pd5tOge9EkBmFr7UxrEWLMwCNZi14WK%3Ds0.jpeg)

![What Is Data Labelling and How to Do It Efficiently [2022]](https://assets-global.website-files.com/5d7b77b063a9066d83e1209c/60d9ab454dc7ad70f8c5d860_supervised-learning-vs-unsupervised-learning.png)

![What Is Transfer Learning? [Examples & Newbie-Friendly Guide]](https://assets-global.website-files.com/5d7b77b063a9066d83e1209c/627d125248f5fa07e1faf0c6_61f54fb4bbd0e14dfe068c8f_transfer-learned-knowledge.png)
